Kafka

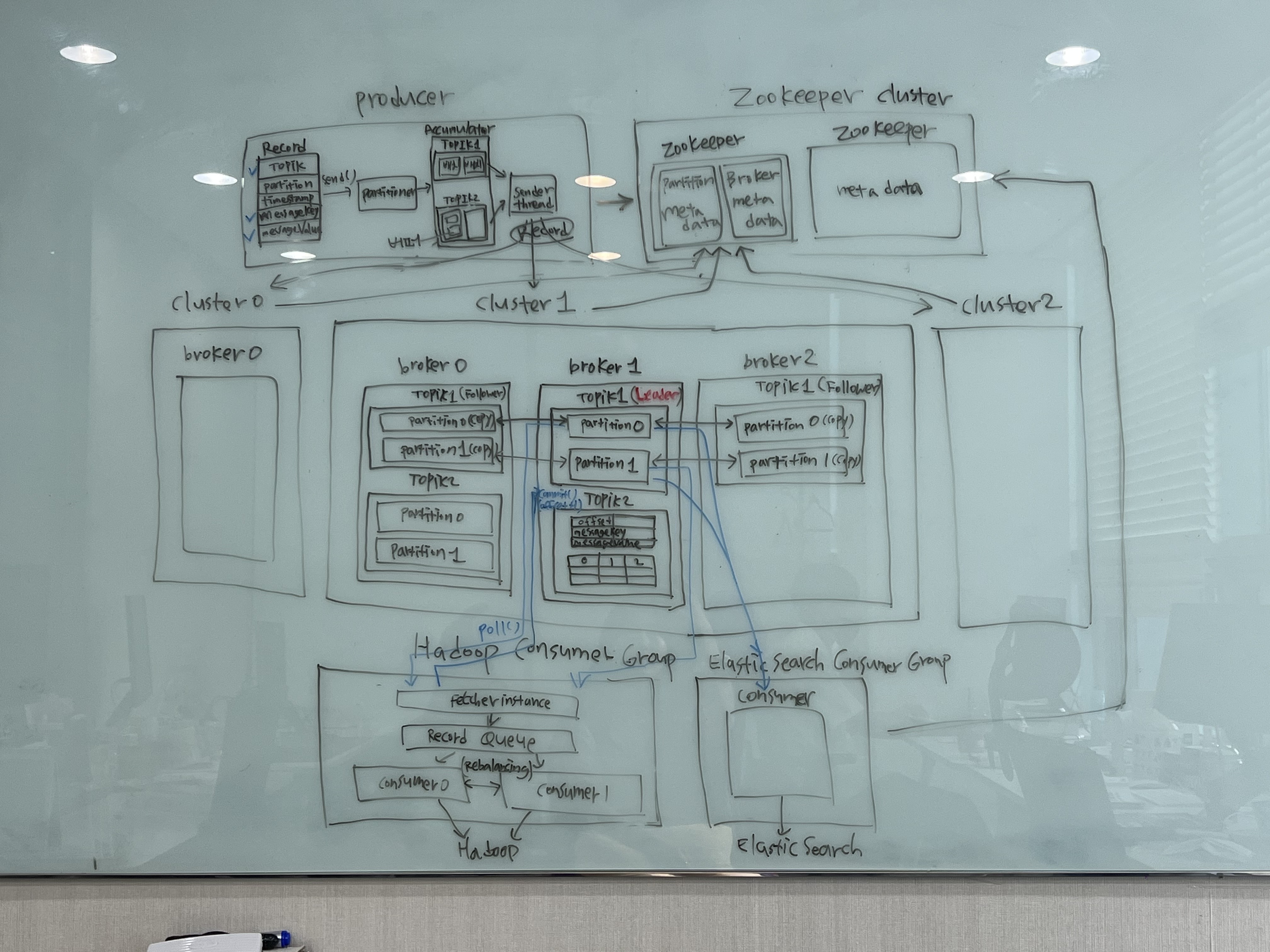

Structure

Record < Partition < Topic < Broker < Cluster

Topic- 메시지를 구분하는 논리적인 단위

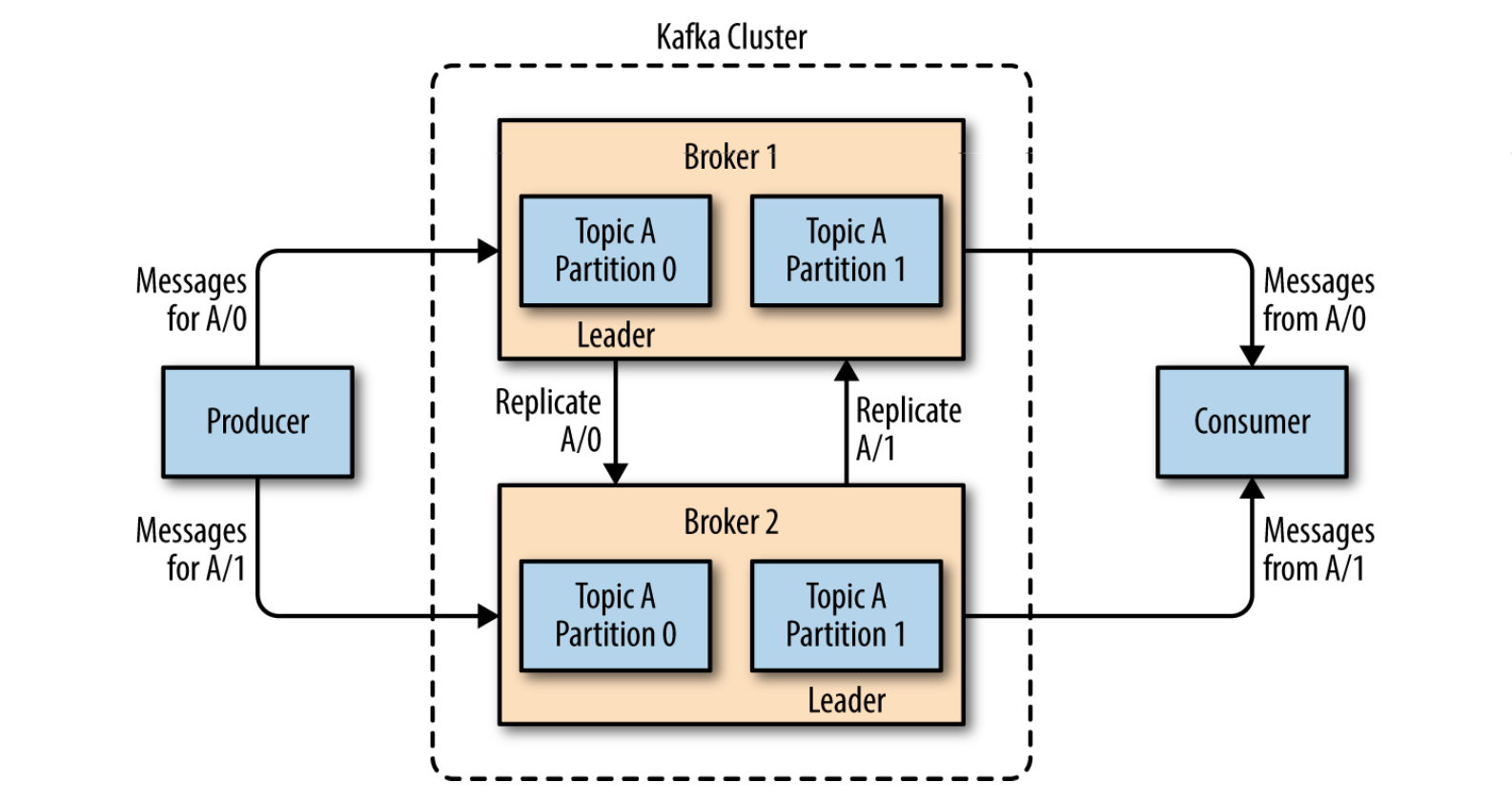

Partition- 모든 토픽은 각각 대응하는 하나 이상의 파티션이 브로커에 구성되고, 발행되는 토픽 메시지들은 파티션들에 나뉘어 저장됨.

- 하나의 토픽에 대하여 여러 파티션을 구성하는 가장 큰 이유 : 분산 처리를 통한 성능 향상

- 하나의 파티션 내에서는 메시지 순서가 보장

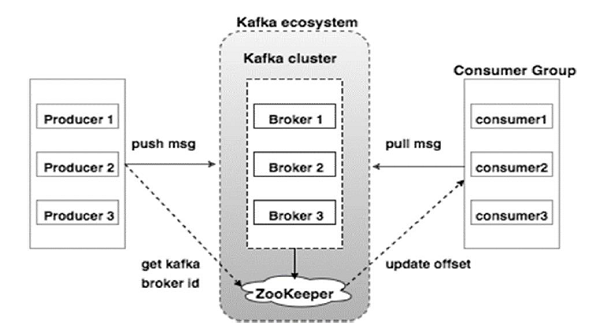

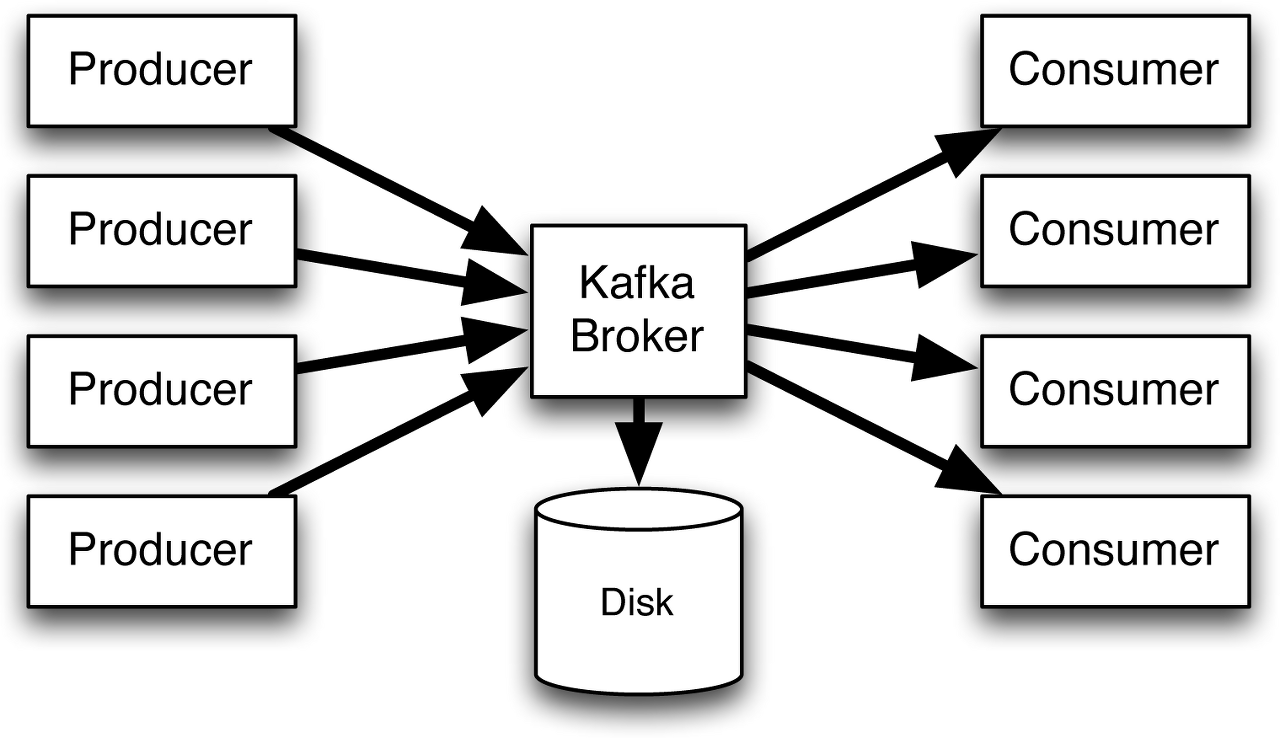

Broker- 카프카 브로커는 프로듀서와 컨슈머 사이에서 메시지를 중계 카프카 브로커가 일반적으로 ‘카프카’라고 불리는 시스템임. 프로듀서와 컨슈머는 별도의 애플리케이션으로 구성되는 반면, 브로커는 카프카 자체이기 때문입니다. 따라서 ‘카프카를 구성한다’ 혹은 ‘카프카를 통해 메시지를 전달한다’에서 카프카는 브로커를 의미.

![imgg]()

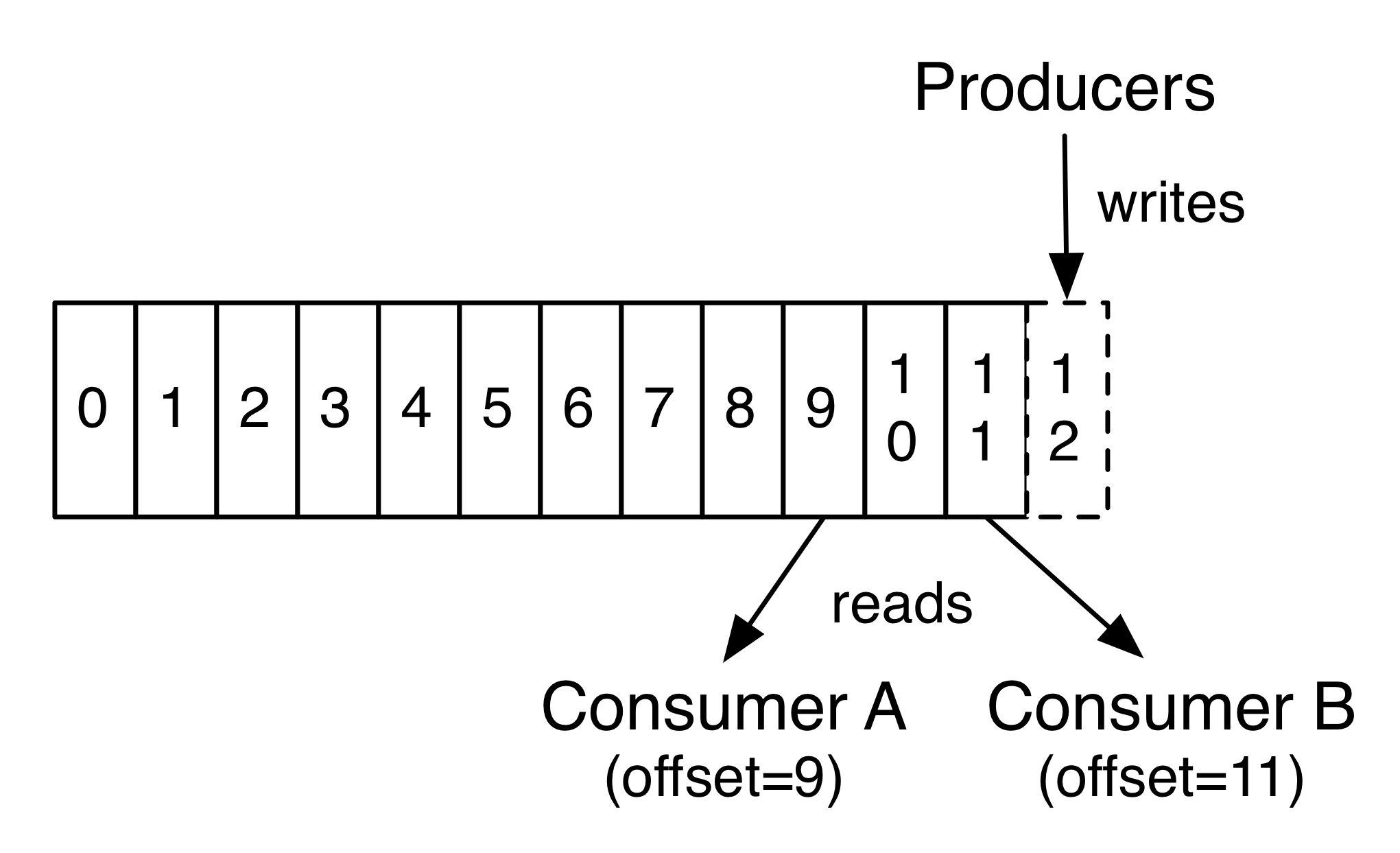

Producer,Consumer- Producer는 오직 끝에만 쓰며, Consumer는 오프셋을 기준으로 차례차례 읽어나감.

Topics- a particular stream of data

- you can have as many topics as you want

- a topic is identified by its name

- Topics are split in partitions

- Each partition is ordered

- Each message within a paritition gets an incremental id, called offset

- 동일한 토픽의 메시지들은 논리적으로 같은 문맥(context)을 가집

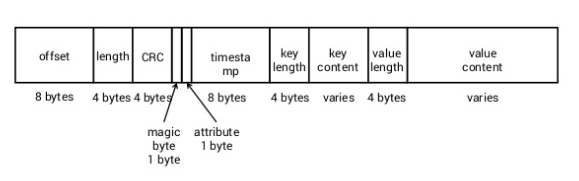

Message![message]()

- Key(키)와 Value(값)로 구성

- 브로커를 통해 메시지가 발행되거나 소비될 때, 메시지 전체가 직렬화/역직렬화됨

- 특정한 구조인 스키마(schema)를 가짐 > 프로듀서가 발행하고 컨슈머가 소비할 때 메시지를 적절하게 처리하기 위해 필요 (만약 프로듀서와 컨슈머가 메시지에 대한 서로 다른 스키마를 가지고 있다면, 정상적인 처리를 할 수 없음)

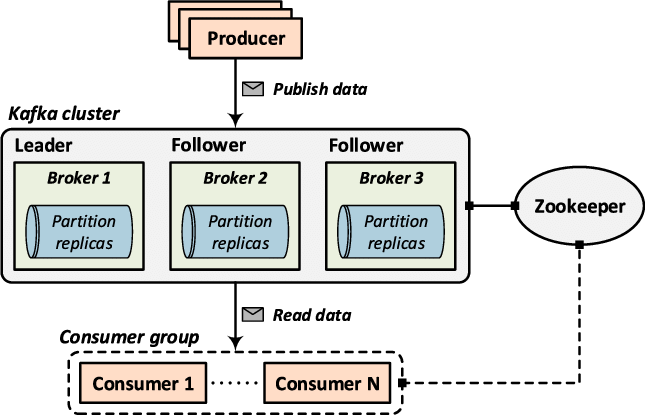

Replica- 서비스 안정성과 장애 수용(Fault-Tolerance)에 관한 요소

- 하나의 파티션은 1개의 리더 레플리카와 그 외 0개 이상의 팔로어 레플리카로 구성됨. 리더 레플리카는 파티션의 모든 쓰기, 읽기 작업을 담당. 반대로 팔로어 레플리카는 리더 레플리카로 쓰인 메시지들을 그대로 복제하고, 만약 리더 레플리카에 장애가 발생하는 경우, 리더 자리를 승계받을 준비를 함. 참고로 승계받을 준비가 된 즉, 리더 레플리카의 메시지를 적절하게 복제하여 리더 레플리카와 동기화된 레플리카들의 그룹을 ISR(In-Sync Replica)라고 함.

- Replication-factor

Offset- only have ameaning for a specific partition

- Ex. offset3 in partition0 doesn’t represent the same data as offset3 in partition1

- Order is guaranteed only within a partition (not across paritions)

Data (Message / Record)

- Data is kept only for a limited time (default is one week)

- once the data is written to a partition, it’t be changed (immutabiltiy)

- Data is assigned randomly to a partition unless a key is provided (more on this later)

necessary : offset, messageKey, messageValue

- reference : https://always-kimkim.tistory.com/entry/kafka101-message-topic-partition

Features

- good solution for large scale message processing applications

- better throughput, built-in partitioning, replication, and fault-tolerance, horizontal scalabiltiy

- messaging uses are often comparatively low-throughput

- but may require low end-to-end latency and often depend on the strong durability guarantees (Kafka can provide)

- kafka can scale to 100s of brokers

- kafka can scale to milions of messages per second

- high performance (latency of less than 10ms) - real time

- for the log history

- for decoupling of data streams & systems

Use cases

- messageing system

- activity tracking

- gather metrics from many different locations

- application logs gathering

- stream processing (with the kafka stream API or Spark for example)

- De-coupling of system dependencies

Integration with Spark, Flink, Storm, Hadoop and many other Big Data technologies

- key point :

in real-time

Kafka VS RabbitMQ

| Kafka | RabbitMQ | |

|---|---|---|

| distributed event streaming platform | open source distributed message broker | |

| pub/sub (생산자가 원하는 각 메시지를 게시할 수 있도록 하는 메시지 배포 패턴) | message broker (응용프로그램, 서비스 및 시스템이 정보를 통신하고 교환할 수 있도록 하는 소프트웨어 모듈) | |

| 복잡한 라우팅에 의존하지 않고 최대 처리량으로 스트리밍하는 데 가장 적합, 다단계 파이프라인에서 데이터를 처리 | 복잡한 라우팅, 신속한 요청-응답이 필요한 웹 서버에 적합 |

- reference : https://coding-nyan.tistory.com/129

- reference : Apache Kafka in 5 minutes

https://www.youtube.com/watch?v=PzPXRmVHMxI Apache Kafka in 6 minutes https://www.youtube.com/watch?v=Ch5VhJzaoaI

https://always-kimkim.tistory.com/entry/kafka101-broker

Internal/External Structure

Example

build.gradle

1

2

3

dependencies {

implementation: "spring-kafka"

}

application.yml

1

2

3

spring:

kafka:

bootstrap-servers: localhost:9092

KafkaTopicConfig.java

1

2

3

4

5

6

7

8

@Configuration

public class KafkaTopicConfig {

@Bean

public NewTopic amgifoscodeTopic() { // NewTopic : org.apache.kafka.clients.admin.NewTopic

return TopicBuilder.name("amigoscode").build();

}

}

Output

- Terminal

- https://kafka.apache.org/quickstart + additional command : https://sangchul.kr/144

1

2

3

4

5

6

7

8

9

10

11

cd kafka_2.12-3.2.1

bin/zookeeper-server-start.sh config/zookeeper.properties

bin/kafka-server-start.sh config/server.properties

// Create a topic

bin/kafka-topics.sh --create --topic quickstart-events --bootstrap-server localhost:9092

// Read the events (topic --> amigoscode)

bin/kafka-console-consumer.sh --topic amigoscode --from-beginning --bootstrap-server localhost:9092

KafkaProducerConfig.java

- Use

KafkaTemplatefor implemeting producer- KafkaProducer.send() in KafkaTemplate.send()

- reference : https://jessyt.tistory.com/142

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26

@Configuration public class KafkaProducerConfig { @Value("${spring.kafka.bootstrap-servers}") private String bootstrapServers; public Map<String, Object> producerConfig() { Map<String, Object> properties = new HashMap<>(); properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers); properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class); properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class); return properties; } @Bean public ProducerFactory<String, String> producerFactory() { return new DefaultKafkaProducerFactory<>(producerConfig()); } @Bean public KafkaTemplate<String, String> kafkaTemplate(ProducerFactory<String, String> producerFactory) { return new KafkaTemplate<>(producerFactory); } // KafkaTemplate<String, Object> ~~~ }

KafkaConsumerConfig.java

Use

ConcurrentKafkaListenerContainerFactoryfor implementing consumerreference : https://semtax.tistory.com/83

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

@Configuration

public class KafkaConsumerConfig {

@Value("localhost:9092")

private String bootstrapServers;

public Map<String, Object> consumerConfig() {

Map<String, Object> properties = new HashMap<>();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringSerializer.class);

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringSerializer.class);

return properties;

}

@Bean

public ConsumerFactory<String, String> consumerFactory() {

return new DefaultKafkaConsumerFactory<>(consumerConfig());

}

@Bean

public KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String,String>> factory(ConsumerFactory<String, String> consumerFactory) {

ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();

factory.setConsumerFactory(consumerFactory);

return factory;

}

// ConcurrentKafkaListenerContainerFactory<String, Object> ~~~

}

KafkaApplication.java

1

2

3

4

5

6

7

8

9

10

11

12

13

14

@SpringBootApplication

public class KafkaexampleApplication {

public static void main(String[] args) {

SpringApplication.run(KafkaexampleApplication.class, args);

}

@Bean

CommandLineRunner commandLineRunner(KafkaTemplate<String, String> kafkaTemplate) {

return args -> {

kafkaTemplate.send("amigoscode", "hello kafka"); // topic, data(message)

};

}

}

- KafkaTemplate.send()

- it goes through different layers before the message is sent to Kafka.

KafkaListeners.java

1

2

3

4

5

6

7

@Componenet

public class KafkaListeners {

@KafkaListener(topics = "amigoscode", groupId = "groupId")

void listener(String data) {

System.out.println("Listener received: " + data );

}

}

MessageRequest.java

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

// record

public record MessageRequest(String message) {

}

=

// class

// Records provide a public constructor (with all arguments), read methods for each field (equivalent to getters) and the implementation of hashCode, equals and toString methods.

public class MessageRequest {

private String message;

public MessageRequest() {

}

public MessageRequest(String message) {

}

public String getMessage() {

return message;

}

public boolean equals(Object o) {

return true;

}

public int hashCode() {

return 0;

}

public String toString() {

return "";

}

}

MessageController.java

1

2

3

4

5

6

7

8

9

10

11

12

13

14

@RestController

@RequestMapping("api/vi/messages")

public class MessageController {

private KafkaTemplate<String, String> kafkaTemplate;

public MessageController(KafkaTemplate<String, String> kafkaTemplate) {

this.kafkaTemplate = kafkaTemplate;

}

@PostMapping

public void publish(@RequestBody MessageReqeust request) {

kafkaTemplate.send("amigoscode", request.getMessage());

}

}

Output

API TEST

1

2

3

4

5

6

POST http://localhost:8080/api/vi/messages

Content-Type: application/json

{

"message": "Api With Kafka"

}

Output

- reference :

Apache Kafka in 5 minutes https://www.youtube.com/watch?v=PzPXRmVHMxI

Kafka Tutorial - Spring Boot Microservices https://www.youtube.com/watch?v=SqVfCyfCJqw

Kafka Topics, Partitions and Offsets Explained https://www.youtube.com/watch?v=_q1IjK5jjyU

Kafka Stream

kafka streams api를 사용하여, 지속적으로 흘러들어오는 데이터에 대한 분석, 처리를 위한 client library

어떤 Topic으로 들어오는 데이터를 Consume하여, streams api를 통해 처리 후 다른 Topic으로 전송(Producing) 하거나 끝내는 행위

Spring cloud stream에서 제공하는 Binder라는 구현체를 중간에 두고 통신하기 때문에, 어느 하나의 미들웨어에 강결합 되어있지 않은 상태에서 애플리케이션을 개발

| binder | bindings(input/output) | |

|---|---|---|

| 메시지 broker 정보 | 메세지를 전송할 채널정보 | |

| 미들웨어와의 통신을 담당하는 컴포넌트 | 미들웨어와 통신을 위한 브릿지 | |

| 미들웨어(kafka)와 producer 및 consumer의 연결, 위임 및 라우팅 등을 담당 | 바인더의 입/출력을 미들웨어(kafka)에 연결하기 위한 Bridge |

Example

build.gradle

1

2

3

dependencies {

implementation 'org.springframework.cloud:spring-cloud-starter-stream-kafka'

}

Default interface

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

public interface Sink {

String INPUT = "process-input"; // INPUT : consumer 입장에서 subscribe 받을 TOPIC명

@Input(INPUT)

SubscribableChannel input();

}

public interface Source {

String OUTPUT = "process-output"; // OUTPUT : producer 입장에서 publish할 TOPIC명

@Output(OUTPUT)

MessageChannel output();

}

public interface Processor extends Source, Sink {

}

Custom interface

1

2

3

4

5

6

7

8

9

10

public interface ProcessMessage {

String SEND_MESSAGE = "send-message";

String RECEIVE_MESSAGE = "receive-message";

@Output(SEND_MESSAGE)

MessageChannel sendMessage();

@Input(RECEIVE_MESSAGE)

SubscribableChannel getMessage();

}

1

2

3

4

5

6

7

8

9

@ConditionalOnProperty(name = "spring.cloud.stream.enabled", havingValue = "true", matchIfMissing = true)

@EnableBinding(EventSource.class)

public class MessageConsumer {

@StreamListener(target = ProcessMessage.RECEIVE_MESSAGE, condition = "headers['eventType'] == 'MessageEvent'")

public void pushMessage(MessageEvent event) throws JsonProcessingException {

...

}

}

1

2

3

public class MessageEvent {

// props

}

application.yml

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

spring:

cloud:

stream:

enable:

kafka:

binder:

brokers: localhost // broker ip

# replication-factor: 2 // minimum = 2

# auto-create-topics: false

bindings: // input/output

receive-message: // channel name

destination: "${spring.cloud.stream.topic:}process-input" // topic name

group: "${spring.cloud.stream.consumer.group:}" // consumer group id

send-message: // output

destination: "${spring.cloud.stream.topic:}process-output"

Related Annotations

@EnableBinding

1

@EnableBinding(EventSource.class)

@KafkaListener

1

@KafkaListener(topics = "amigoscode", groupId = "groupId")

@ConditionalOnProperty(…)

enables bean registration only if an environment property is present and has a specific value.

1

@ConditionalOnProperty(name = "spring.cloud.stream.enabled", havingValue = "true", matchIfMissing = true)

- condition : spring.cloud.stream.enabled:true (in application.yml)

- name (=value) : key in application.yml

- havingValue : value of key in application.yml

- matchIfMissing : whether create a bean even if not matching

@ConditionalOnMissingBean

- reference : https://saramin.github.io/2019-08-28-2/

https://piotrminkowski.com/2021/11/11/kafka-streams-with-spring-cloud-stream/

http://www.chidoo.me/index.php/2016/11/06/building-a-messaging-system-with-kafka/

https://jaehun2841.github.io/2019/12/23/2019-12-23-kafka-streams-binder-feature/#Version-Up

https://sangchul.kr/144

Comments powered by Disqus.